Search engines are a seemingly innocent and useful tool that emerged when the web took the world by storm. However, did you know that search engines such as Google, Bing, DuckDuckGo, and many more can be used to reveal sensitive information? First, let's analyze how these search engines work. A SERP, or Search Engine Results Page, presents you with the most organically relevant results for your search query, not including paid advertising. These results are determined by crawling, indexing, and ranking; however, we will only cover crawling.

Crawling - the discovery process in which the search engine sends out a team of robots, known as crawlers, to find new and updated content. Content can vary: a web page, an image, a video, a PDF, and so on. Whatever the format, content is discovered through links. The bots start by fetching a few webpages and then follow the links on those pages to find new URLs. By hopping from link to link, the crawler finds new content and adds it to Google's index, called Caffeine. Website owners can steer this process with a robots.txt file, located in the site's root directory, which suggests which parts of the site search engines should and shouldn't crawl. On the searcher's side, Google lets us filter what it has crawled and indexed by using advanced operators. Using these operators with the Google search engine is referred to as Google Dorking.
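To make link hopping and robots.txt concrete, here is a minimal breadth-first crawler sketch in Python. It is a toy, not how Google's crawler actually works: the `fetch` callable, the `ToyBot` user agent, and the example.com pages are hypothetical, and pages come from an in-memory dict so no network access is needed. Links are pulled from `<a href>` tags with the standard-library `HTMLParser`, and `urllib.robotparser` decides whether each URL may be crawled.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, robots_lines, user_agent="ToyBot", max_pages=50):
    """Breadth-first crawl from start_url, honoring robots.txt rules.

    fetch(url) is a hypothetical caller-supplied function that returns the
    page's HTML, or None if the page does not exist.
    robots_lines is the site's robots.txt content as a list of lines.
    Returns the URLs that were actually crawled, in crawl order.
    """
    robots = RobotFileParser()
    robots.parse(robots_lines)
    seen = {start_url}
    queue = deque([start_url])
    crawled = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        if not robots.can_fetch(user_agent, url):
            continue  # robots.txt asks crawlers to skip this path
        html = fetch(url)
        if html is None:
            continue  # dead link
        crawled.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            # Resolve relative links and drop #fragments before queueing
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled
```

For example, with `pages` as a dict of URL to HTML, `crawl("https://example.com/", pages.get, ["User-agent: *", "Disallow: /private/"])` discovers every linked page but skips anything under `/private/`. Note that robots.txt is only a request: the check above is something a well-behaved crawler chooses to do, and nothing stops a client from fetching a disallowed path directly.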

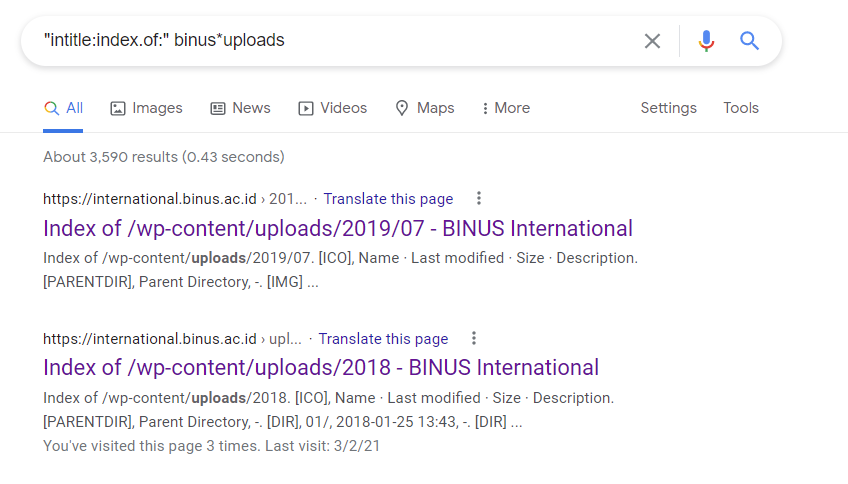

Google Dorking - a hacking technique that uses Google Search and other Google applications to find security holes in the configuration and code that websites are using. Also called Google hacking, it involves using advanced operators in the Google search engine to locate specific strings of text within search results. Here are some examples of Google Dorking that our professor had us do as an exercise.
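As a generic illustration of how advanced operators combine into a single dork, here is a small Python sketch. The operators themselves (`site:`, `filetype:`, `intitle:`, `inurl:`) are standard Google search operators; the helper names `build_dork` and `search_url` are hypothetical, and the script only builds the query and its search URL, which you would then open in a browser.

```python
from urllib.parse import urlencode


def build_dork(terms="", **operators):
    """Combine free-text terms with Google advanced operators into one query.

    Each keyword argument becomes an 'operator:value' token, e.g.
    site="example.com" -> 'site:example.com'. Values containing spaces
    are wrapped in quotes so Google treats them as an exact phrase.
    """
    tokens = []
    for op, value in operators.items():
        if " " in value:
            value = f'"{value}"'
        tokens.append(f"{op}:{value}")
    if terms:
        tokens.append(terms)
    return " ".join(tokens)


def search_url(query):
    """Return a Google search URL for the query, with the query URL-encoded."""
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    # Hypothetical target: PDFs on example.com that mention "confidential"
    dork = build_dork('"confidential"', site="example.com", filetype="pdf")
    print(dork)
    print(search_url(dork))
```

Space-separated tokens are all required to match, so each extra operator narrows the results further; for instance, `build_dork(intitle="index of")` produces `intitle:"index of"`, a classic query for exposed directory listings.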

Summary - As you can see, Google Dorking and search engines in general can be very rewarding for gathering information on a target and even for identifying points of interest and possible routes of attack. We can go a step further in using search engines with Shodan.

Shodan - a search engine that crawls the Internet itself, probing IP addresses and ports, whereas Google and Bing crawl the World Wide Web by following links between pages. In essence, Shodan gives you information about devices connected to the Internet, and these devices vary tremendously, from small desktops to entire computer labs. Shodan collects its information from banners: it banner-grabs the metadata that software running on a device sends back when a connection is made. This can include server software information, service capabilities, and more. Using Shodan can reveal servers, open ports, locations, services, and even vulnerabilities.
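Banner grabbing itself is simple; Shodan's contribution is doing it across the whole Internet and indexing the results. The Python sketch below grabs a single banner with the standard `socket` module. It is an illustration under stated assumptions, not Shodan's implementation: the function name `grab_banner` and its `probe`, `timeout`, and `max_bytes` parameters are hypothetical choices.

```python
import socket
from typing import Optional


def grab_banner(host: str, port: int, probe: Optional[bytes] = None,
                timeout: float = 3.0, max_bytes: int = 1024) -> str:
    """Open a TCP connection and return the first bytes the service sends.

    Services such as SSH or FTP announce themselves as soon as you connect;
    others, such as HTTP, stay silent until the client sends a request, so
    an optional probe (for example an HTTP HEAD request) is sent first.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        if probe:
            sock.sendall(probe)
        try:
            data = sock.recv(max_bytes)
        except socket.timeout:
            data = b""  # the service said nothing within the timeout
    # Banners are usually ASCII text; replace any undecodable bytes
    return data.decode("utf-8", errors="replace").strip()
```

On a machine you control that runs an SSH server, `grab_banner("127.0.0.1", 22)` would typically return a line beginning with `SSH-2.0-` followed by the server software and version, which is exactly the kind of metadata Shodan indexes and matches against known vulnerabilities.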